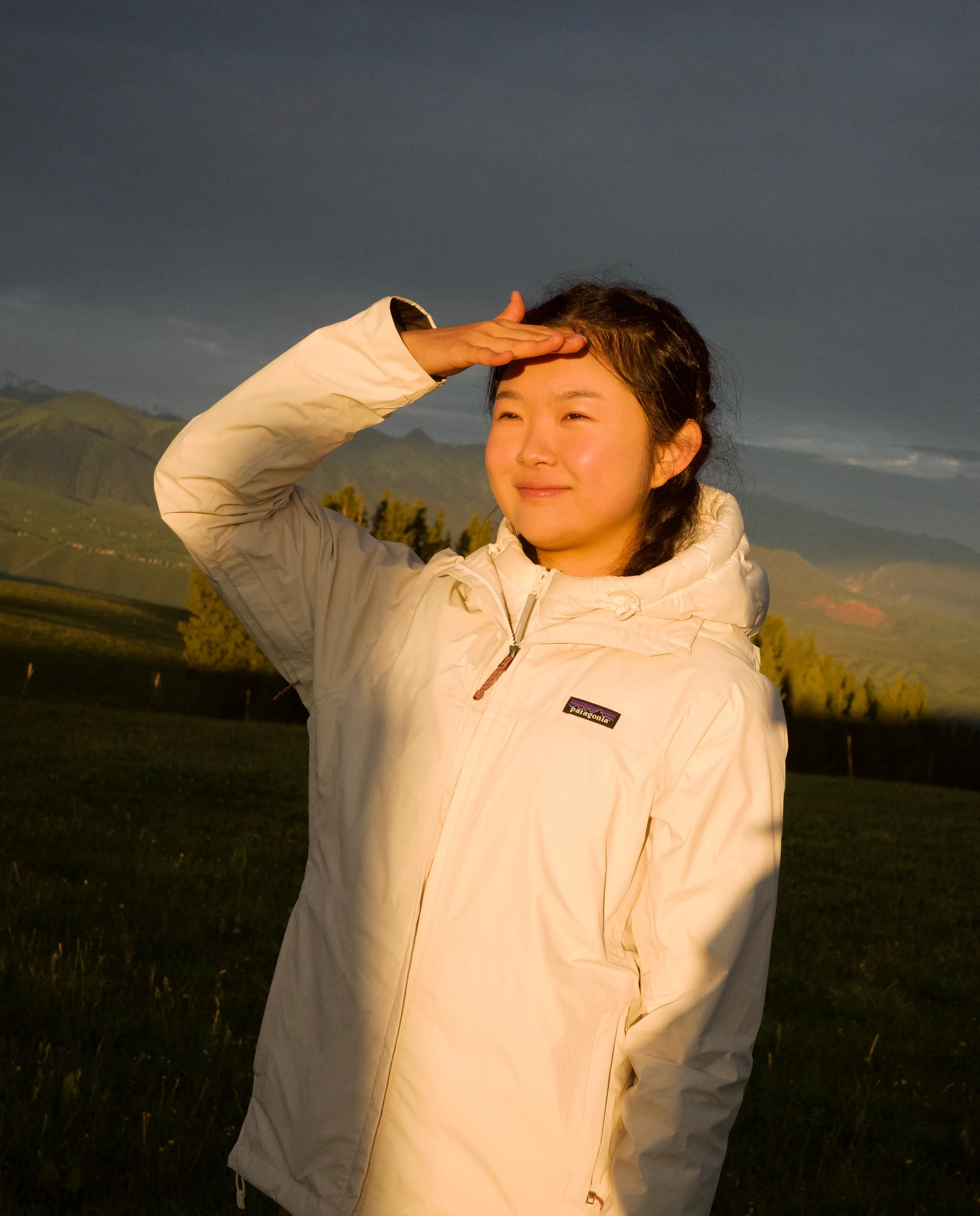

Yijia Dai

CS PhD @ Cornell. 问心无愧 (a clear conscience).

👋 ▫️ 👍 👀 ❕

Learning is key to any type of intelligence. I love thinking about how humans learn, and how to translate that to machines at scale. Questions like "How does the sequential ordering of knowledge affect human learning?" intrigue me.

An ambitious goal of mine is to create AI systems that discover new knowledge in the real world that benefits our daily lives.

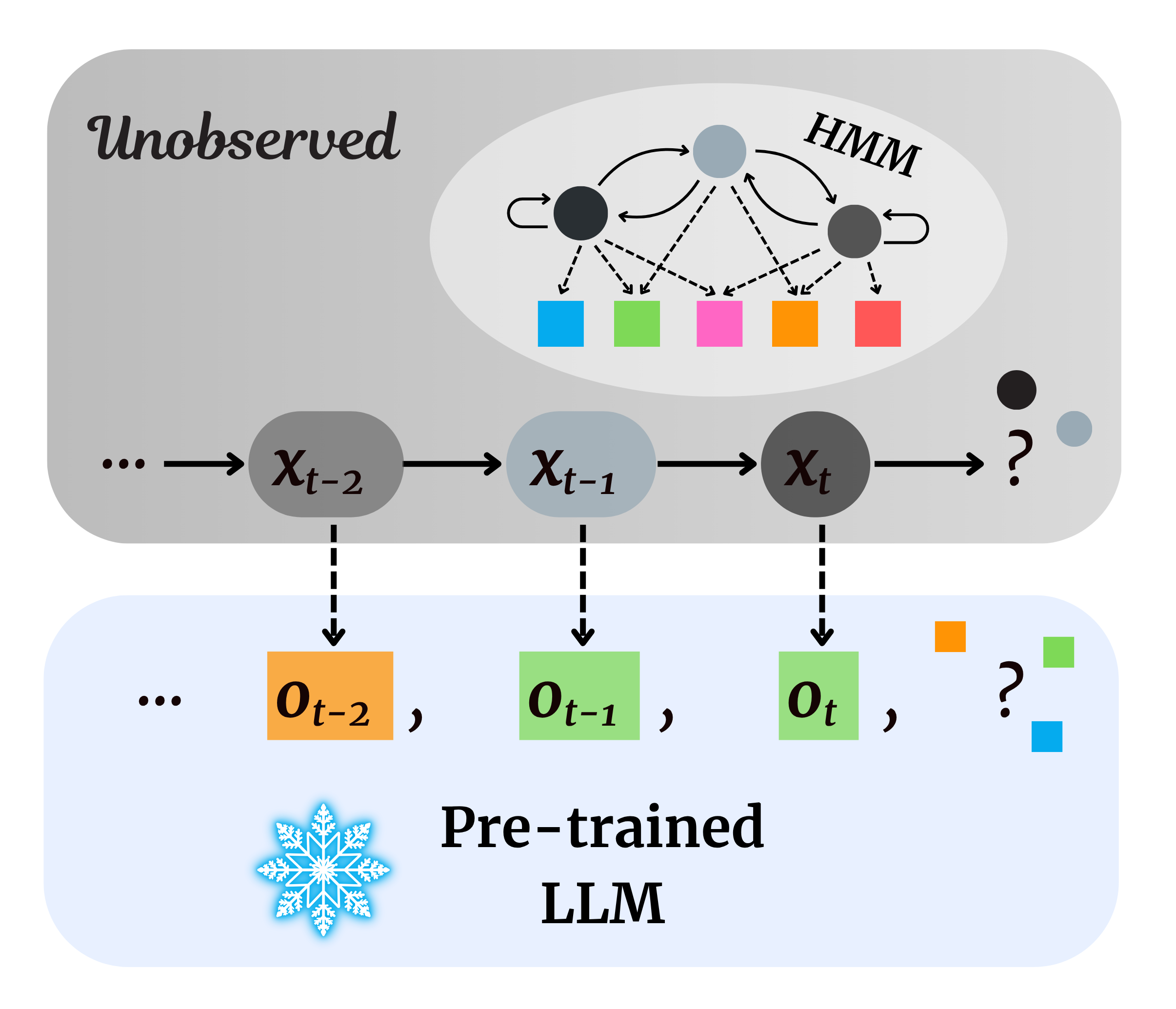

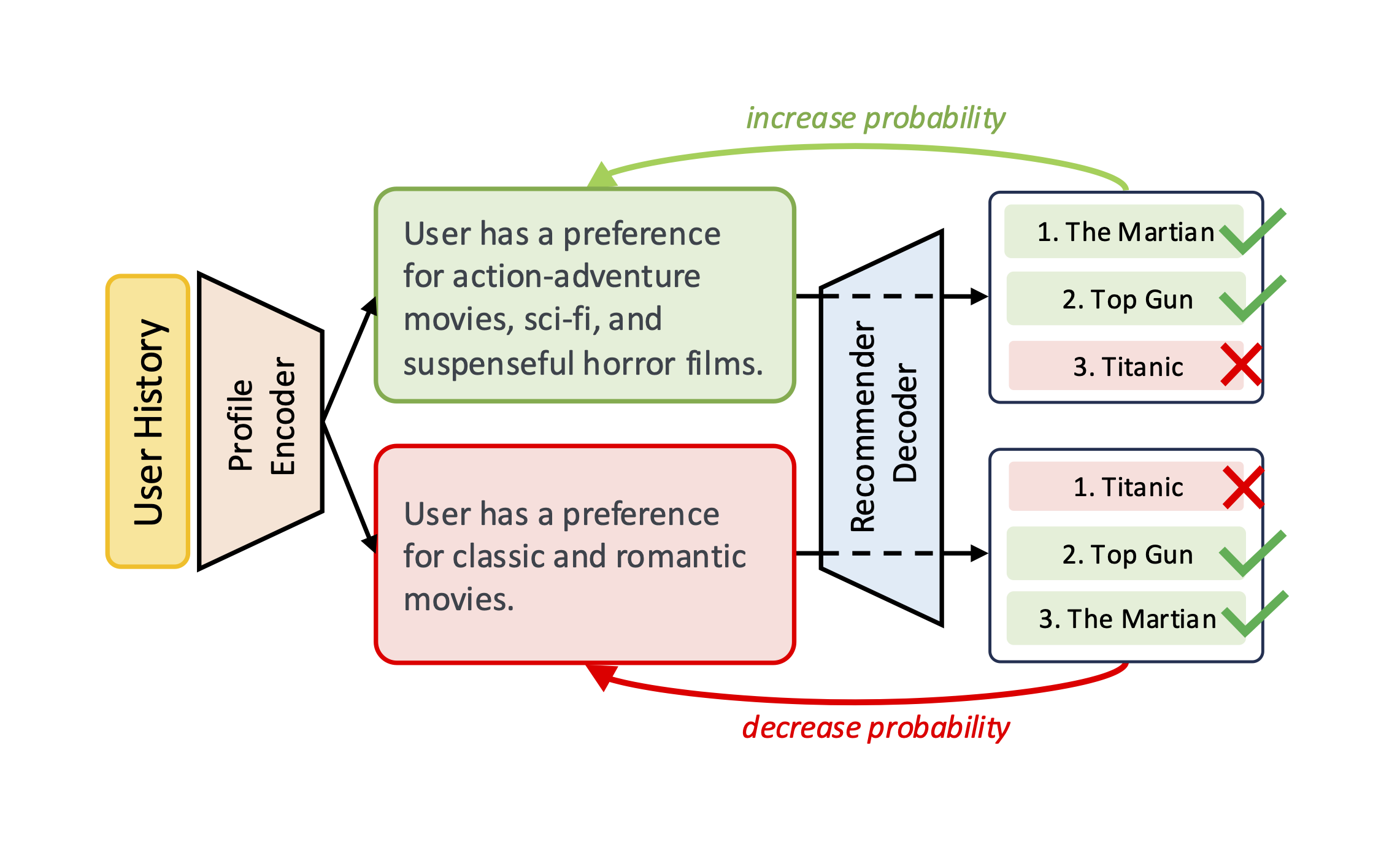

I’m fortunate to be advised by Prof. Sarah Dean and Prof. Jennifer Sun at Cornell. Currently, I study reinforcement learning and LLMs for scientific discovery. Examples of my work include sample-efficient low-rank representation learning for dynamical and unobserved states, and LLMs for understanding decision trajectories.